Micron HBM Designed into Leading AMD AI Platform

Rhea-AI Summary

Positive

- Integration with AMD's high-end AI GPU platform demonstrates Micron's strong industry position in HBM technology

- Partnership enables support for massive AI models with up to 520 billion parameters on a single GPU

- Solution delivers exceptional performance with up to 8 TB/s bandwidth and 161 PFLOPS at FP4 precision

- Product qualification achieved on multiple leading AI platforms, indicating strong market acceptance

Negative

- None.

Insights

Micron's HBM3E memory design win in AMD's MI350 AI GPUs strengthens its position in the high-margin AI accelerator memory market.

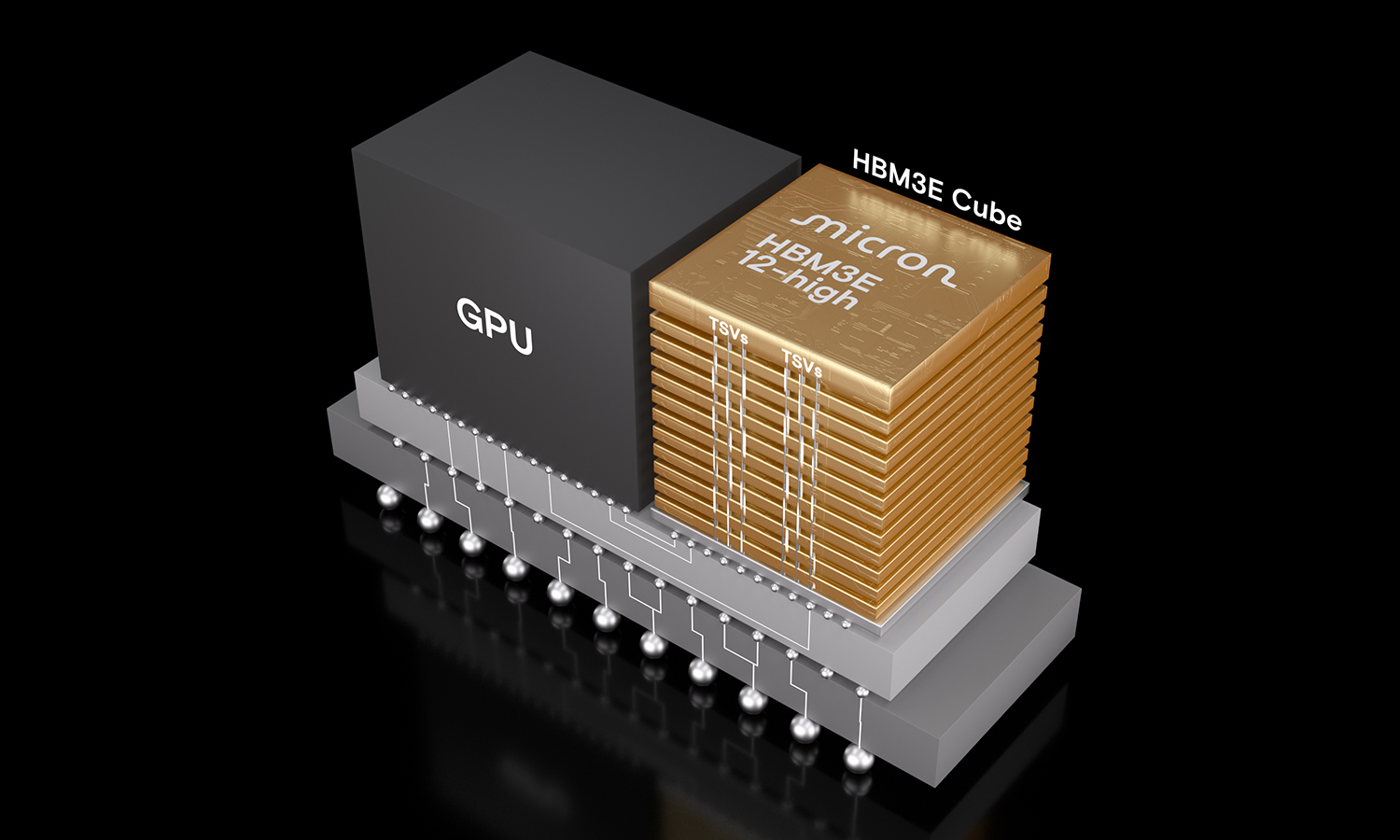

Micron's announcement represents a significant design win in the rapidly expanding AI accelerator market. The company's HBM3E 36GB 12-high memory being integrated into AMD's next-generation Instinct MI350 Series GPUs positions Micron favorably in the high-bandwidth memory segment – one of the semiconductor industry's most lucrative and technically challenging markets.

The technical specifications are particularly noteworthy. AMD's platforms will leverage 288GB of HBM3E memory capacity per GPU with up to 8 TB/s bandwidth, enabling support for AI models with up to 520 billion parameters on a single GPU. In full platform configurations, this scales to 2.3TB of HBM3E memory with theoretical performance reaching 161 PFLOPS at FP4 precision. These specifications represent the cutting edge of AI acceleration capabilities.

From a competitive perspective, Micron's qualification on multiple AI platforms suggests the company is successfully competing against Samsung and SK Hynix in the premium HBM market. This shift in product mix from commodity DRAM toward higher-margin, specialized memory products strengthens Micron's business model and could improve its gross margin profile.

The partnership also demonstrates Micron's manufacturing excellence, as HBM3E production requires advanced packaging technologies and precise manufacturing processes. The 12-high stack configuration mentioned is particularly challenging to produce at scale, indicating Micron has overcome significant technical hurdles.

For semiconductor investors, this partnership reinforces Micron's strategic positioning as AI workloads continue to drive demand for high-performance memory, which may command premium pricing compared to standard memory products.

Micron high bandwidth memory (HBM3E 36GB 12-high) and the AMD Instinct™ MI350 Series GPUs and platforms support the pace of AI data center innovation and growth


BOISE, Idaho, June 12, 2025 (GLOBE NEWSWIRE) -- Micron Technology, Inc. (Nasdaq: MU) today announced the integration of its HBM3E 36GB 12-high offering into the upcoming AMD Instinct™ MI350 Series solutions. This collaboration highlights the critical role of power efficiency and performance in training large AI models, delivering high-throughput inference and handling complex HPC workloads such as data processing and computational modeling. Furthermore, it represents another significant milestone in HBM industry leadership for Micron, showcasing its robust execution and the value of its strong customer relationships.

The Micron HBM3E 36GB 12-high solution brings industry-leading memory technology to AMD Instinct™ MI350 Series GPU platforms, providing outstanding bandwidth and lower power consumption.¹ The AMD Instinct MI350 Series GPU platforms, built on the advanced AMD CDNA 4 architecture, integrate 288GB of high-bandwidth HBM3E memory capacity, delivering up to 8 TB/s bandwidth for exceptional throughput. This immense memory capacity allows Instinct MI350 Series GPUs to efficiently support AI models with up to 520 billion parameters on a single GPU. In a full platform configuration, Instinct MI350 Series GPUs offer up to 2.3TB of HBM3E memory and achieve peak theoretical performance of up to 161 PFLOPS at FP4 precision, with leadership energy efficiency and scalability for high-density AI workloads. This tightly integrated architecture, combined with Micron’s power-efficient HBM3E, enables exceptional throughput for large language model training, inference and scientific simulation tasks, empowering data centers to scale seamlessly while maximizing compute performance per watt. This joint effort between Micron and AMD has enabled faster time to market for AI solutions.
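As a rough sanity check on the capacity figures above, the arithmetic can be sketched in a few lines of Python. The release does not state the number of HBM3E stacks per GPU, the number of GPUs per platform, or the precision behind the 520-billion-parameter figure. The values below (8 stacks, 8 GPUs, 4-bit weights, decimal units) are assumptions chosen because they reproduce the quoted totals, not confirmed specifications.

```python
# Back-of-the-envelope check of the capacity figures quoted in the release.
# ASSUMPTIONS (not stated in the release): 8 HBM3E stacks per GPU,
# 8 GPUs per platform, 4-bit (FP4) weights, decimal units (1 TB = 1000 GB).

STACK_GB = 36            # HBM3E 36GB 12-high stack, from the release
STACKS_PER_GPU = 8       # assumption
GPUS_PER_PLATFORM = 8    # assumption
BITS_PER_PARAM = 4       # assumption: weights stored at FP4

# Per-GPU and per-platform memory capacity
gpu_capacity_gb = STACK_GB * STACKS_PER_GPU
platform_capacity_tb = gpu_capacity_gb * GPUS_PER_PLATFORM / 1000

# Memory needed just for the weights of a 520-billion-parameter model
params = 520_000_000_000
weights_gb = params * BITS_PER_PARAM / 8 / 1e9

# Capacity left over for activations, KV cache, and other runtime state
headroom_gb = gpu_capacity_gb - weights_gb

print(f"Per-GPU capacity:  {gpu_capacity_gb} GB")
print(f"Platform capacity: {platform_capacity_tb:.1f} TB")
print(f"Weights at FP4:    {weights_gb:.0f} GB")
print(f"Headroom per GPU:  {headroom_gb:.0f} GB")
```

Under these assumptions the numbers line up with the release: 288 GB per GPU, about 2.3 TB per platform, and roughly 260 GB of weights for a 520-billion-parameter model, which fits within a single GPU's memory.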

“Our close working relationship and joint engineering efforts with AMD optimize compatibility of the Micron HBM3E 36GB 12-high product with the Instinct MI350 Series GPUs and platforms. Micron’s HBM3E industry leadership and technology innovations provide improved TCO benefits to end customers with high performance for demanding AI systems,” said Praveen Vaidyanathan, vice president and general manager of Cloud Memory Products at Micron.

“The Micron HBM3E 36GB 12-high product is instrumental in unlocking the performance and energy efficiency of AMD Instinct™ MI350 Series accelerators,” said Josh Friedrich, corporate vice president of AMD Instinct Product Engineering at AMD. “Our continued collaboration with Micron advances low-power, high-bandwidth memory that helps customers train larger AI models, speed inference and tackle complex HPC workloads.”

The Micron HBM3E 36GB 12-high product is now qualified on multiple leading AI platforms. For more information on Micron’s HBM product portfolio, visit the High-bandwidth memory page at micron.com.


About Micron Technology, Inc.

Micron Technology, Inc. is an industry leader in innovative memory and storage solutions, transforming how the world uses information to enrich life for all. With a relentless focus on our customers, technology leadership, and manufacturing and operational excellence, Micron delivers a rich portfolio of high-performance DRAM, NAND, and NOR memory and storage products through our Micron® and Crucial® brands. Every day, the innovations that our people create fuel the data economy, enabling advances in artificial intelligence (AI) and compute-intensive applications that unleash opportunities — from the data center to the intelligent edge and across the client and mobile user experience. To learn more about Micron Technology, Inc. (Nasdaq: MU), visit micron.com.

© 2025 Micron Technology, Inc. All rights reserved. Information, products, and/or specifications are subject to change without notice. Micron, the Micron logo, and all other Micron trademarks are the property of Micron Technology, Inc. All other trademarks are the property of their respective owners.

Micron Product and Technology Communications Contact:

Mengxi Liu Evensen

+1 (408) 444-2276

productandtechnology@micron.com

Micron Investor Relations Contact

Satya Kumar

+1 (408) 450-6199

satyakumar@micron.com

____________________________

¹ Data rate testing estimates are based on a shmoo plot of pin speed performed in a manufacturing test environment. Power and performance estimates are based on simulation results of workload use cases.