Supermicro Expands NVIDIA Blackwell Portfolio with New 4U and 2-OU (OCP) Liquid-Cooled NVIDIA HGX B300 Solutions Ready for High-Volume Shipment

Rhea-AI Summary

Supermicro (NASDAQ: SMCI) announced on Dec 9, 2025, the commercial availability of new liquid-cooled NVIDIA HGX B300 systems in two form factors: a 4U Front I/O 19-inch EIA rack design and a compact 2-OU (OCP) 21-inch Open Rack V3 design.

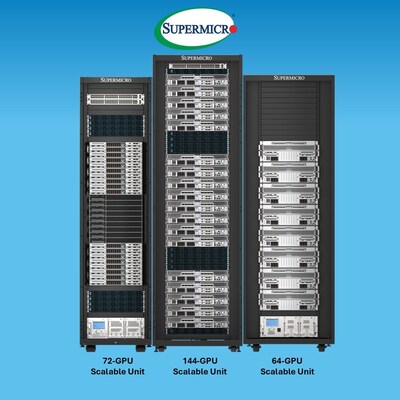

Key facts: the 2-OU OCP platform supports up to 144 GPUs per rack (18 nodes), the 4U EIA option enables up to 64 GPUs per rack, each system can house NVIDIA Blackwell Ultra GPUs at up to 1,100W TDP, and systems deliver 2.1TB HBM3e GPU memory per system. DLC-2 liquid cooling captures up to 98% of system heat and the designs target up to 40% power savings and fabric throughput up to 800Gb/s.

Positive

- Up to 144 GPUs per rack in 2-OU OCP ORV3

- DLC-2 captures up to 98% of system heat

- 2.1TB HBM3e GPU memory per system

- Network throughput up to 800Gb/s with ConnectX-8 SuperNICs

- Designed for up to 40% data center power savings

Negative

- GPUs specified at up to 1,100W TDP each

- Rack-scale deployment relies on 1.8MW in-row CDUs

News Market Reaction

On the day this news was published, SMCI declined 0.34%, reflecting a mild negative market reaction.

Data tracked by StockTitan Argus on the day of publication.

Market Reality Check

Peers on Argus

SMCI was up 1.96% while key peers were mixed: HPQ -2.6%, PSTG -0.41%, WDC -0.67%, STX +1.57%, LOGI +0.08%, indicating a more stock-specific move.

Historical Context

| Date | Event | Sentiment | Move | Catalyst |
|---|---|---|---|---|
| Nov 20 | Investor conferences | Positive | -6.4% | Announced participation in several December technology and AI investor conferences. |
| Nov 19 | AI server launch | Positive | -3.4% | Launched new 10U air-cooled AI server featuring AMD Instinct MI355X GPUs. |
| Nov 18 | AI factory clusters | Positive | +2.4% | Announced turnkey NVIDIA-based AI factory cluster solutions for scalable deployment. |
| Nov 17 | HPC/AI showcase | Positive | +2.4% | Showcased future HPC clusters and liquid-cooled AI infrastructure at Supercomputing 2025. |
| Nov 04 | Earnings release | Positive | -6.6% | Reported Q1 FY2026 results and reiterated at least $36B full-year revenue outlook. |

Recent history shows several instances where positive AI or earnings news was followed by negative price reactions, suggesting a tendency to sell into good news.

Over the past months, SMCI reported Q1 FY2026 results with $5.0B net sales and reiterated at least $36B revenue expectations, yet shares fell after that release. The company has repeatedly expanded its AI hardware portfolio, including AMD Instinct MI355X systems and NVIDIA Blackwell-based AI factory clusters, and highlighted liquid-cooled infrastructure at Supercomputing 2025. Despite these product and AI factory announcements, price reactions have been mixed, with both gains around +2.35% and pullbacks exceeding -6% on ostensibly positive updates.

Market Pulse Summary

This announcement expands SMCI’s NVIDIA Blackwell portfolio with liquid‑cooled HGX B300 systems that reach up to 144 GPUs per rack and a SuperCluster design totaling 1,152 GPUs. It builds on prior AI factory and liquid‑cooling initiatives aimed at performance per watt and faster time‑to‑online. Investors may track adoption of these 2.1TB HBM3e systems, the impact of up to 40 percent power savings, and how this complements previously disclosed multi‑billion‑dollar Blackwell order visibility.

Key Terms

- direct liquid-cooling (technical)

- OCP Open Rack V3 (technical)

- InfiniBand (technical)

- NVIDIA-Certified Systems (technical)

- liquid-cooling (technical)

- coolant distribution units (technical)

AI-generated analysis. Not financial advice.

- Introducing 4U and 2-OU (OCP) liquid-cooled NVIDIA HGX B300 systems for high-density hyperscale and AI factory deployments, supported by Supermicro Data Center Building Block Solutions® with DLC-2 and DLC technology, respectively

- 4U liquid-cooled NVIDIA HGX B300 systems designed for standard 19-inch EIA racks with up to 64 GPUs per rack, capturing up to 98% of system heat through DLC-2 (Direct Liquid-Cooling) technology

- Compact and power-efficient 2-OU (OCP) NVIDIA HGX B300 8-GPU system designed for 21-inch OCP Open Rack V3 (ORV3) specification with up to 144 GPUs in a single rack

"With AI infrastructure demand accelerating globally, our new liquid-cooled NVIDIA HGX B300 systems deliver the performance density and energy efficiency that hyperscalers and AI factories need today," said Charles Liang, president and CEO of Supermicro. "We're now offering the industry's most compact NVIDIA HGX B300 solutions—achieving up to 144 GPUs in a single rack—while reducing power consumption and cooling costs through our proven direct liquid-cooling technology. Through our DCBBS, this is how Supermicro enables our customers to deploy AI at scale: faster time-to-market, maximum performance per watt, and end-to-end integration from design to deployment."

For more information, please visit https://www.supermicro.com/en/accelerators/nvidia

The 2-OU (OCP) liquid-cooled NVIDIA HGX B300 system, built to the 21-inch OCP Open Rack V3 (ORV3) specification, enables up to 144 GPUs per rack to deliver maximum GPU density for hyperscale and cloud providers requiring space-efficient racks without compromising serviceability. The rack-scale design features blind-mate manifold connections, modular GPU/CPU tray architecture, and state-of-the-art component liquid cooling solutions. The system propels AI workloads with eight NVIDIA Blackwell Ultra GPUs at up to 1,100W TDP each, while dramatically reducing rack footprint and power consumption. A single ORV3 rack supports up to 18 nodes with 144 GPUs total, scaling seamlessly with NVIDIA Quantum-X800 InfiniBand switches and Supermicro's 1.8MW in-row coolant distribution units (CDUs). Combined, eight NVIDIA HGX B300 compute racks, three NVIDIA Quantum-X800 InfiniBand networking racks, and two Supermicro in-row CDUs form a SuperCluster scalable unit with 1,152 GPUs.
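The density figures quoted above follow from simple multiplication, and the short Python sketch below reproduces them from the per-node numbers in the announcement. The function and constant names are illustrative only. The per-rack GPU TDP and HBM3e totals are derived values that do not appear in the release; the TDP total covers GPUs alone at their stated upper bound and should not be read as total rack power.

```python
# Figures quoted in the announcement for the 2-OU (OCP) platform.
GPUS_PER_NODE = 8            # HGX B300 8-GPU system
NODES_PER_ORV3_RACK = 18     # nodes per ORV3 rack
COMPUTE_RACKS_PER_UNIT = 8   # compute racks per SuperCluster scalable unit
GPU_TDP_W = 1_100            # stated upper-bound TDP per GPU, in watts
HBM3E_TB_PER_NODE = 2.1      # HBM3e GPU memory per system, in TB


def rack_summary(nodes_per_rack=NODES_PER_ORV3_RACK):
    """Derive per-rack totals from the per-node figures (illustrative helper)."""
    gpus = nodes_per_rack * GPUS_PER_NODE
    return {
        "gpus_per_rack": gpus,
        # GPU-only upper bound; excludes CPUs, NICs, pumps, and other loads.
        "gpu_tdp_kw_per_rack": gpus * GPU_TDP_W / 1000,
        "hbm3e_tb_per_rack": round(nodes_per_rack * HBM3E_TB_PER_NODE, 1),
    }


def scalable_unit_gpus(compute_racks=COMPUTE_RACKS_PER_UNIT):
    """GPUs in one scalable unit built from identical compute racks."""
    return compute_racks * rack_summary()["gpus_per_rack"]


if __name__ == "__main__":
    print(rack_summary())
    print(scalable_unit_gpus())
```

With the quoted inputs, the sketch yields 144 GPUs per rack and 1,152 GPUs per scalable unit, matching the release.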

Complementing the 2-OU (OCP) model, the 4U Front I/O HGX B300 Liquid-Cooled System offers the same compute performance in a traditional 19-inch EIA rack form factor for large-scale AI factory deployments. The 4U system leverages Supermicro's DLC-2 technology to capture up to 98% of system heat.

Supermicro NVIDIA HGX B300 systems unlock substantial performance speedups, with 2.1TB of HBM3e GPU memory per system to handle larger model sizes at the system level. Above all, both the 2-OU (OCP) and 4U platforms deliver significant performance gains at the cluster level by doubling compute fabric network throughput to as much as 800Gb/s via integrated NVIDIA ConnectX®-8 SuperNICs when used with NVIDIA Quantum-X800 InfiniBand or NVIDIA Spectrum-4 Ethernet. These improvements accelerate heavy AI workloads such as agentic AI applications, foundation model training, and multimodal large-scale inference in AI factories.

Supermicro developed these platforms to address key customer requirements for TCO, serviceability, and efficiency. With the DLC-2 technology stack, data centers can achieve up to 40 percent power savings [1], reduce water consumption through 45°C warm water operation, and eliminate chilled water and compressors. Supermicro DCBBS delivers the new systems as fully validated, tested racks ready as L11 and L12 solutions before shipment, accelerating time-to-online for hyperscale, enterprise, and federal customers.

These new systems expand Supermicro's broad portfolio of NVIDIA Blackwell platforms, including the NVIDIA GB300 NVL72, NVIDIA HGX B200, and NVIDIA RTX PRO 6000 Blackwell Server Edition. Each of these NVIDIA-Certified Systems from Supermicro is tested to validate optimal performance for a wide range of AI applications and use cases, together with NVIDIA networking and NVIDIA AI software, including NVIDIA AI Enterprise and NVIDIA Run:ai. This gives customers the flexibility to build AI infrastructure that scales from a single node to full-stack AI factories.

[1] https://www.supermicro.com/en/solutions/liquid-cooling

About Super Micro Computer, Inc.

Supermicro (NASDAQ: SMCI) is a global leader in Application-Optimized Total IT Solutions. Founded and operating in

Supermicro, Server Building Block Solutions, and We Keep IT Green are trademarks and/or registered trademarks of Super Micro Computer, Inc.

All other brands, names, and trademarks are the property of their respective owners.

View original content to download multimedia: https://www.prnewswire.com/news-releases/supermicro-expands-nvidia-blackwell-portfolio-with-new-4u-and-2-ou-ocp-liquid-cooled-nvidia-hgx-b300-solutions-ready-for-high-volume-shipment-302637056.html

SOURCE Super Micro Computer, Inc.